Curiefense is integrated with Envoy Proxy, the prominent open-source edge and service proxy for cloud-native applications. Initially developed by Lyft and now part of the Cloud Native Computing Foundation landscape, Envoy is designed to create a transparent network and make it easier to troubleshoot problems.

Envoy provides many benefits to modern microservice architectures. In this article, we’ll discuss the motives for its development, give a brief overview of its features, cover some of its most popular use cases, and lastly explain what Curiefense adds to it.

Matt Klein first announced Envoy in 2016, describing it as “a self contained process that is designed to run alongside every application server. All of the Envoys form a transparent communication mesh in which each application sends and receives messages to and from localhost and is unaware of the network topology.” As a proxy, Envoy represents an application (usually a service) in a network; the application no longer participates directly in that network, and all communication goes through the proxy.

This frees up developers from having to implement a number of networking-related features in their applications, because the proxy has these capabilities built into it.

Envoy’s driving principle is observability. As Matt Klein noted, “The project was born out of the belief that: The network should be transparent to applications. When network and application problems do occur it should be easy to determine the source of the problem.”

Creating a transparent network for cloud-native applications and facilitating troubleshooting is not straightforward. It’s a challenging goal that Envoy achieves via a robust set of features:

These features let you use Envoy to create networking solutions for modern cloud-native applications, a few of which we will cover below.

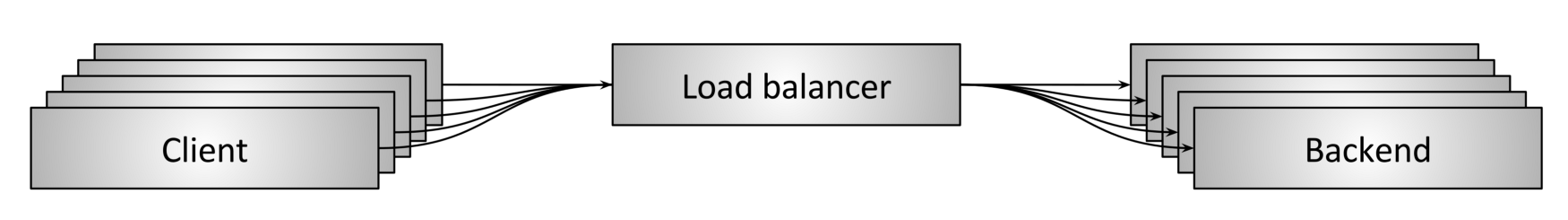

For modern web applications and services, network load balancing is a must. It comes in various forms; perhaps the most familiar topology is the middle proxy, shown here in Figure 1. Clients connect to the load balancer, which distributes the resulting loads across the backend.

Figure 1: Load balancer (Source: Envoy Proxy blog)

A load balancer must perform a number of critical tasks, such as service discovery (finding out what backend services are available to process loads), health checks (monitoring service instances to see which are healthy and have available capacity), and request distribution (deciding how to distribute incoming loads across the available services).
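The three tasks above can be illustrated with a minimal Python sketch. This is not how Envoy implements them; the `LoadBalancer` class, the `discover` and `check` callables, and the addresses are all hypothetical, and round-robin is just one simple distribution policy chosen for the example.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Callable, Dict, List


@dataclass
class Backend:
    address: str
    healthy: bool = True


@dataclass
class LoadBalancer:
    # Service discovery: a callable returning the current backend addresses (assumed).
    discover: Callable[[], List[str]]
    # Health check: a callable reporting whether one address is healthy (assumed).
    check: Callable[[str], bool]
    backends: Dict[str, Backend] = field(default_factory=dict)
    _counter: count = field(default_factory=count)

    def refresh(self) -> None:
        """Re-run discovery, then health-check every discovered backend."""
        addresses = self.discover()
        self.backends = {a: Backend(a, self.check(a)) for a in addresses}

    def pick(self) -> str:
        """Request distribution: round-robin over the healthy backends."""
        healthy = sorted(a for a, b in self.backends.items() if b.healthy)
        if not healthy:
            raise RuntimeError("no healthy backends available")
        return healthy[next(self._counter) % len(healthy)]


# Hypothetical usage: three discovered backends, one of them failing its check.
lb = LoadBalancer(
    discover=lambda: ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"],
    check=lambda address: address != "10.0.0.2:8080",
)
lb.refresh()
picks = [lb.pick() for _ in range(4)]
print(picks)
```

In this sketch the unhealthy backend is never selected, and requests alternate between the two healthy ones. A real proxy would refresh discovery and health state continuously rather than on demand.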

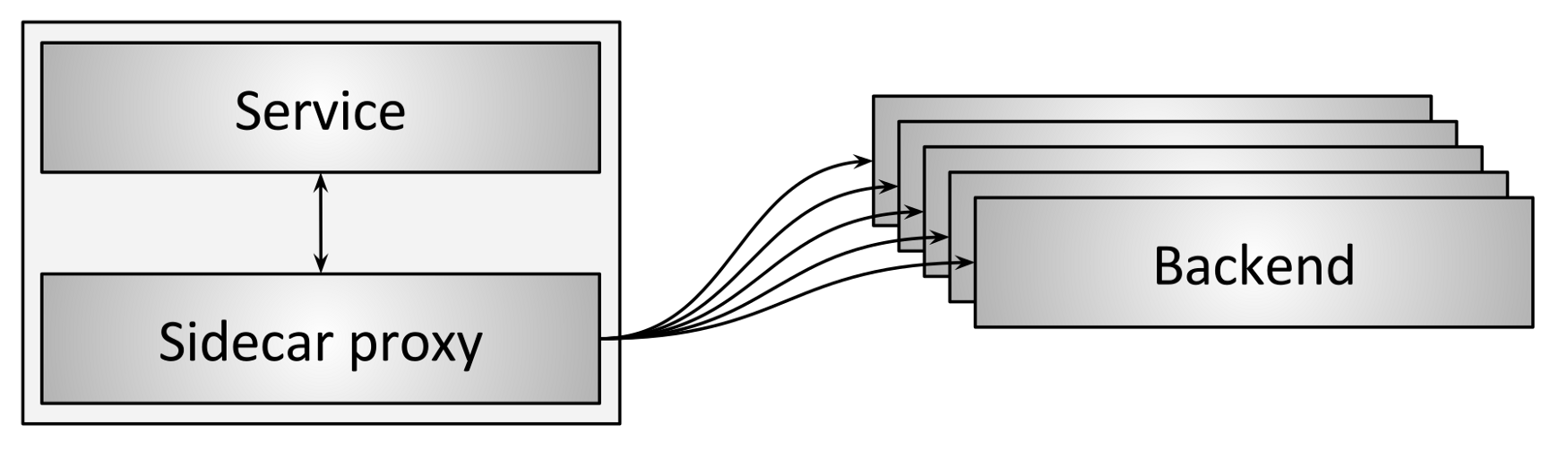

You can use Envoy for L4 load balancing of TCP and UDP connections and for L7 load balancing of HTTP connections. In addition, Envoy supports L7 protocol parsing and routing for HTTP/1, HTTP/2, gRPC, Redis, MongoDB, MySQL, Kafka, and DynamoDB. You can deploy Envoy either as a middle proxy, similar to Figure 1, or as a sidecar proxy:

Figure 2: Load balancing with sidecars (Source: Envoy Proxy blog)

In this role, Envoy can provide much more than load balancing. When all services are using Envoy sidecar proxies, this creates a service mesh.

The service mesh has become a widely used architecture in the cloud-native world. It is the communication layer between microservices: all requests pass through it, which makes communication between distributed applications more secure and reliable. The services no longer communicate directly with each other; instead, each service communicates only with its Envoy proxy, and the proxies communicate among themselves.

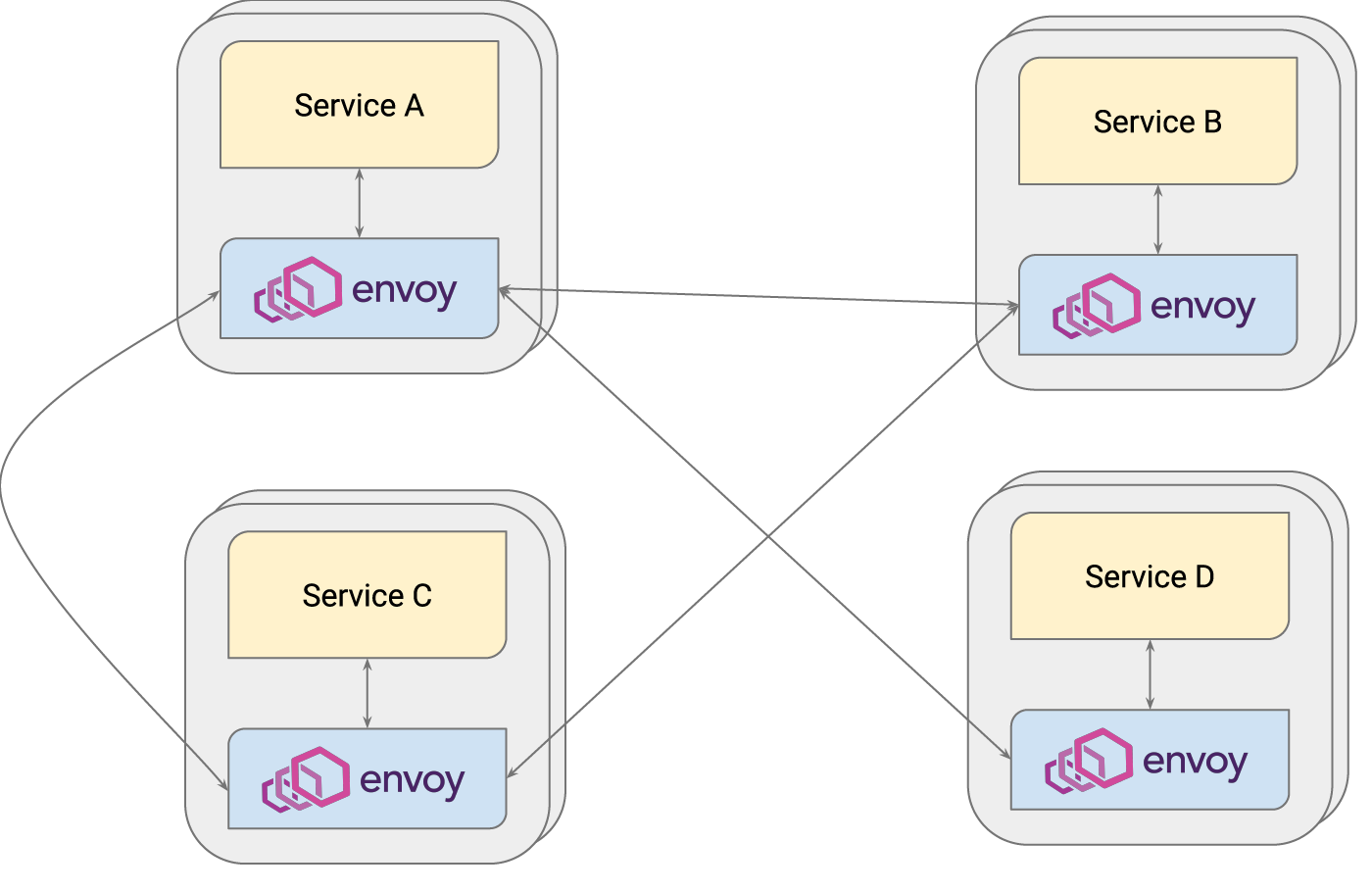

Figure 3: Envoy as a service mesh (Source: Envoy Project Authors)

The four services shown above in Figure 3 have been deployed with Envoy sidecar proxies. All network traffic between the services, including HTTP, gRPC, TCP, and UDP, goes through the proxies. In other words, the service instances are abstracted away from the networking stack, an abstraction that makes it easier to develop and deploy distributed applications. For this and other reasons, there is a high adoption rate of service meshes in the industry.
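To make the abstraction concrete, here is a toy in-process model of the sidecar pattern in Python. It is only a sketch: the `Sidecar` class, the shared `mesh` registry, and the service names are hypothetical, and no real networking takes place. The point is that each application only ever talks to its own proxy, and the proxies do the routing and record the traffic.

```python
from typing import Callable, Dict, List, Tuple

Handler = Callable[[str], str]


class Sidecar:
    """Hypothetical in-process stand-in for a sidecar proxy."""

    def __init__(self, service_name: str, app: Handler, mesh: Dict[str, "Sidecar"]):
        self.service_name = service_name
        self.app = app    # the local application behind this proxy
        self.mesh = mesh  # shared registry of all sidecars, keyed by service name
        self.log: List[Tuple[str, str, str]] = []  # traffic seen by this proxy
        mesh[service_name] = self

    def send(self, destination: str, message: str) -> str:
        """Outbound call from the local app: forward to the destination's sidecar."""
        self.log.append(("out", destination, message))
        return self.mesh[destination].receive(self.service_name, message)

    def receive(self, source: str, message: str) -> str:
        """Inbound call from a peer sidecar: hand the message to the local app."""
        self.log.append(("in", source, message))
        return self.app(message)


# Hypothetical usage: "orders" calls "billing" by name, via the proxies only.
mesh: Dict[str, Sidecar] = {}
orders = Sidecar("orders", lambda m: f"orders handled {m}", mesh)
billing = Sidecar("billing", lambda m: f"billing handled {m}", mesh)
reply = orders.send("billing", "invoice-42")
print(reply)
```

Because every message passes through a proxy on both sides, each sidecar's `log` gives a complete record of the traffic, which is the kind of observability the mesh is meant to provide.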

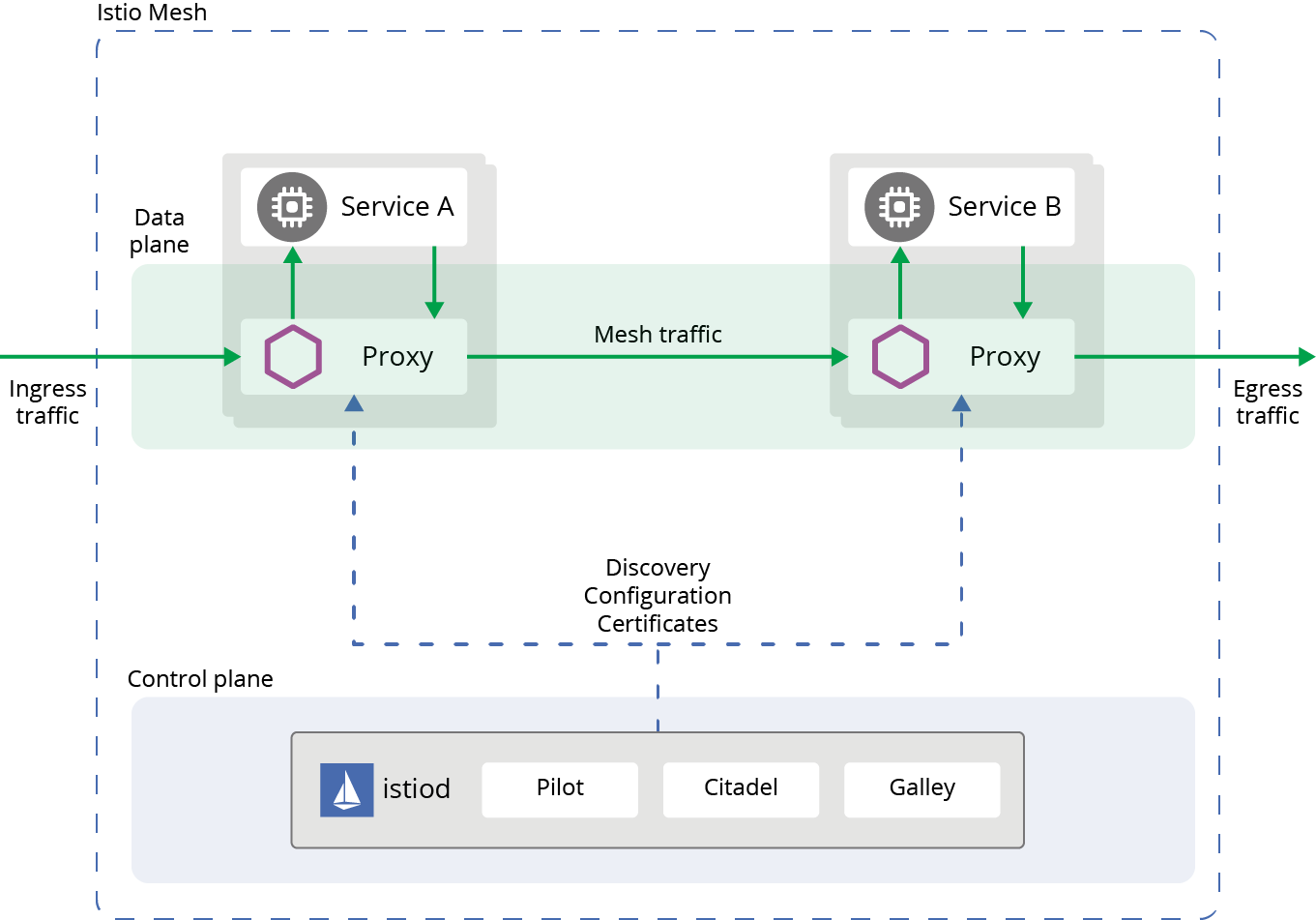

A service mesh setup can be logically divided into two parts: a data plane and a control plane. In the data plane, the actual requests are handled via the following tasks:

To run the data plane, a control plane is needed to provide the configuration and policy for all the components in the service mesh. Envoy delivers all the features of the data plane; for the control plane, Istio is a popular choice.

Figure 4: Istio Architecture (Source: Istio Authors)

According to a recent survey by CNCF, nearly 50% of production-grade service meshes are deployed with Istio. So if you’re planning to use Envoy as a part of your service mesh, it’s worthwhile to check out Istio and get familiar with its additional features.

An API gateway is a management layer used to organize and distribute requests to the microservices. With the rise of microservices and cloud deployments, more and more applications use API gateways as their entry point for users and client applications.

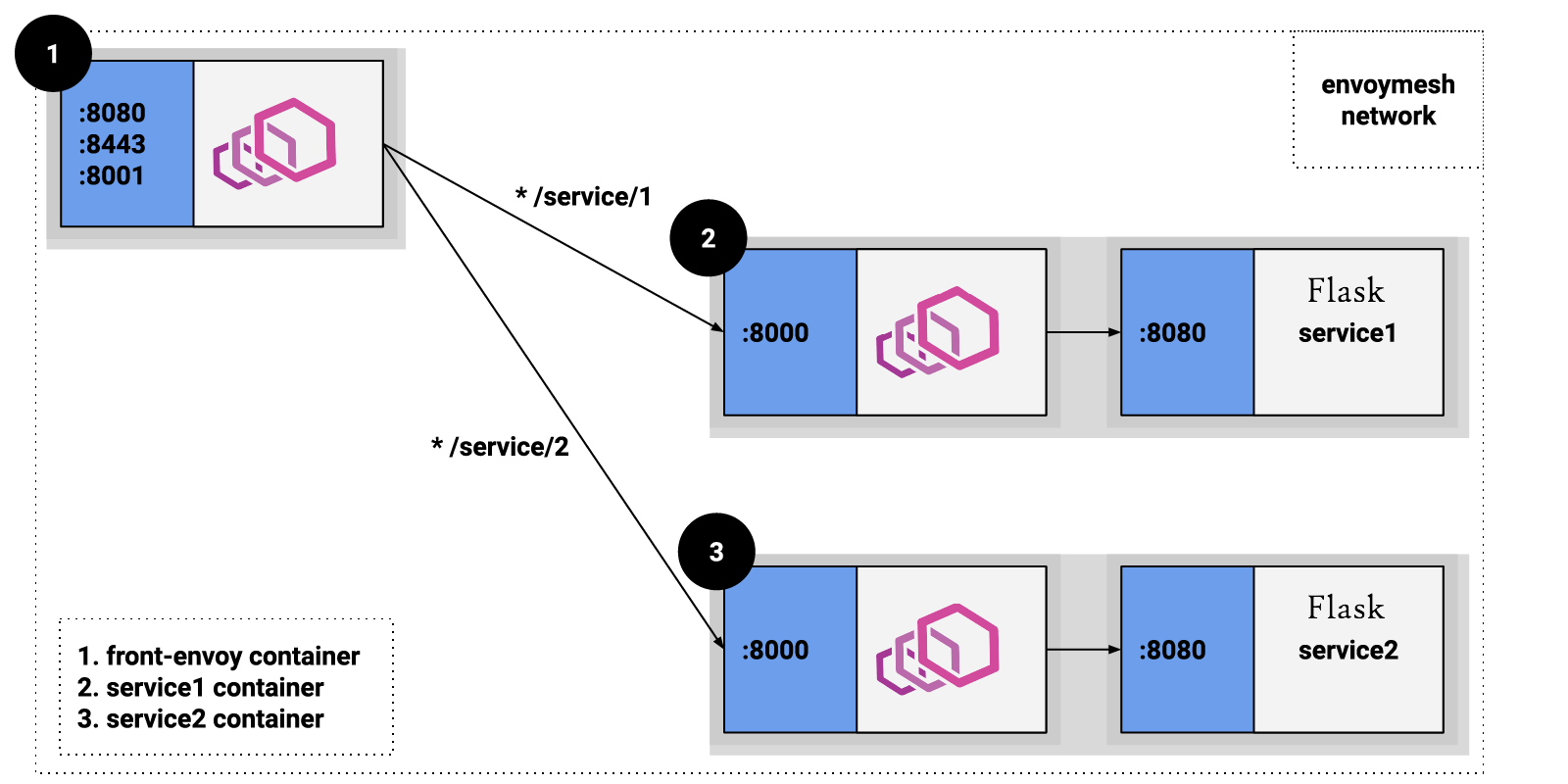

You can use Envoy as an API gateway through its front proxy feature, so that it manages the inbound traffic and directs requests to the services in the cluster. For instance, with the following setup, all incoming requests to ports 8080, 8443, and 8001 are managed by Envoy. Requests to the endpoint /service/1 are sent to the Envoy sidecar proxy of Service 1, and requests to /service/2 go to that of Service 2.

Figure 5: Front proxy deployment (Source: Envoy Project Authors)
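The path-based routing in this setup can be sketched in a few lines of Python. This only illustrates prefix matching and is not Envoy's configuration format or API; the `ROUTES` table, the upstream names, and the `route` function are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical route table: path prefix -> upstream service name.
ROUTES: Dict[str, str] = {
    "/service/1": "service1",
    "/service/2": "service2",
}


def route(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream for the longest matching path prefix, or None."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None
    return routes[max(matches, key=len)]


print(route("/service/1/users"))
print(route("/service/2"))
print(route("/unknown"))
```

Note that this is plain string-prefix matching, so a path such as `/service/10` would also match the `/service/1` prefix. Picking the longest matching prefix is a design choice of this sketch that keeps the result independent of the order of the table.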

Envoy has a rich set of capabilities, but sometimes an unsupported use case will arise. For that reason, Envoy supports extensions and provides hooks for them. Its architecture consists of multiple stacks of listeners and filters, which serve as the extension points.
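The idea of a filter stack as an extension point can be sketched as follows. This is a conceptual Python illustration, not Envoy's extension API (Envoy's own filters are written against its native interfaces); the `Filter` type, the two example filters, and `run_chain` are hypothetical names.

```python
from typing import Callable, Dict, List, Optional

Request = Dict[str, str]
# A filter returns None to let the request continue,
# or a response string to stop the chain early.
Filter = Callable[[Request], Optional[str]]


def add_request_id(request: Request) -> Optional[str]:
    """Example filter that modifies the request and lets it continue."""
    request.setdefault("x-request-id", "generated-id")
    return None


def block_admin(request: Request) -> Optional[str]:
    """Example filter that rejects requests to a protected path."""
    if request.get("path", "").startswith("/admin"):
        return "403 Forbidden"
    return None


def run_chain(
    filters: List[Filter],
    request: Request,
    upstream: Callable[[Request], str],
) -> str:
    """Run filters in order; forward to the upstream only if none stops the chain."""
    for current_filter in filters:
        response = current_filter(request)
        if response is not None:
            return response
    return upstream(request)


chain: List[Filter] = [add_request_id, block_admin]
print(run_chain(chain, {"path": "/admin/users"}, lambda r: "200 OK"))
print(run_chain(chain, {"path": "/orders"}, lambda r: "200 OK"))
```

Adding a new behavior means appending another filter to the list, without touching the existing ones or the upstream application.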

As Envoy is still in development, there is no high-level documentation for writing custom extensions. However, there are a number of existing extensions that can be studied. To get started on building a custom extension, take a look at the example network filter.

Because of its robust set of features, Envoy is a prominent proxy and networking solution for cloud-native microservices. In this article, we’ve gone through a brief overview of Envoy. Here are some recommended resources to learn more:

Envoy is usually deployed in situations where all the traffic sent to a specific destination (e.g., a microservice, API endpoint, or even an entire network) passes through it. This means it is a natural point for adding traffic filtering. By integrating with Envoy, Curiefense brings built-in web security to the wide variety of possible uses for this popular proxy. Anywhere Envoy can be used, Curiefense can filter traffic and block hostile requests.